Chapter 10 – Introduction to Artificial Neural Networks with Keras

This notebook contains all the sample code and solutions to the exercises in chapter 10.


Setup

First, let’s import a few common modules, ensure Matplotlib plots figures inline, and prepare a function to save the figures. We also check that Python 3.5 or later is installed (Python 2.x may work, but it is deprecated, so we strongly recommend Python 3), as well as Scikit-Learn ≥0.20 and TensorFlow ≥2.0.

# Python ≥3.5 is required

import sys

assert sys.version_info >= (3, 5)

# Scikit-Learn ≥0.20 is required

import sklearn

assert sklearn.__version__ >= "0.20"

try:

    # %tensorflow_version only exists in Colab.

    %tensorflow_version 2.x

except Exception:

    pass

# TensorFlow ≥2.0 is required

import tensorflow as tf

assert tf.__version__ >= "2.0"

# Common imports

import numpy as np

import os

# to make this notebook's output stable across runs

np.random.seed(42)

# To plot pretty figures

%matplotlib inline

import matplotlib as mpl

import matplotlib.pyplot as plt

mpl.rc('axes', labelsize=14)

mpl.rc('xtick', labelsize=12)

mpl.rc('ytick', labelsize=12)

# Where to save the figures

PROJECT_ROOT_DIR = "."

CHAPTER_ID = "ann"

IMAGES_PATH = os.path.join(PROJECT_ROOT_DIR, "images", CHAPTER_ID)

os.makedirs(IMAGES_PATH, exist_ok=True)

def save_fig(fig_id, tight_layout=True, fig_extension="png", resolution=300):

    path = os.path.join(IMAGES_PATH, fig_id + "." + fig_extension)

    print("Saving figure", fig_id)

    if tight_layout:

        plt.tight_layout()

    plt.savefig(path, format=fig_extension, dpi=resolution)

# Ignore useless warnings (see SciPy issue #5998)

import warnings

warnings.filterwarnings(action="ignore", message="^internal gelsd")

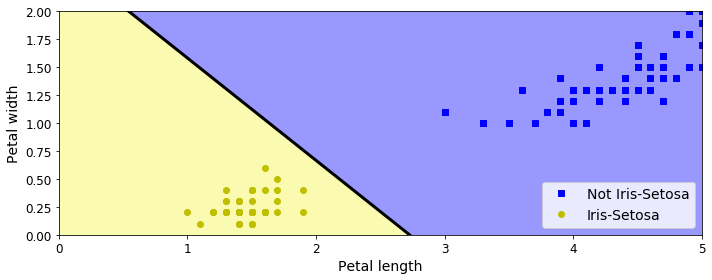

Perceptrons

Note: we set max_iter and tol explicitly to avoid warnings about the fact that their default value will change in future versions of Scikit-Learn.

import numpy as np

from sklearn.datasets import load_iris

from sklearn.linear_model import Perceptron

iris = load_iris()

X = iris.data[:, (2, 3)] # petal length, petal width

y = (iris.target == 0).astype(int)  # np.int was removed from recent NumPy releases

per_clf = Perceptron(max_iter=1000, tol=1e-3, random_state=42)

per_clf.fit(X, y)

y_pred = per_clf.predict([[2, 0.5]])

y_pred

a = -per_clf.coef_[0][0] / per_clf.coef_[0][1]

b = -per_clf.intercept_ / per_clf.coef_[0][1]

axes = [0, 5, 0, 2]

x0, x1 = np.meshgrid(

np.linspace(axes[0], axes[1], 500).reshape(-1, 1),

np.linspace(axes[2], axes[3], 200).reshape(-1, 1),

)

X_new = np.c_[x0.ravel(), x1.ravel()]

y_predict = per_clf.predict(X_new)

zz = y_predict.reshape(x0.shape)

plt.figure(figsize=(10, 4))

plt.plot(X[y==0, 0], X[y==0, 1], "bs", label="Not Iris-Setosa")

plt.plot(X[y==1, 0], X[y==1, 1], "yo", label="Iris-Setosa")

plt.plot([axes[0], axes[1]], [a * axes[0] + b, a * axes[1] + b], "k-", linewidth=3)

from matplotlib.colors import ListedColormap

custom_cmap = ListedColormap(['#9898ff', '#fafab0'])

plt.contourf(x0, x1, zz, cmap=custom_cmap)

plt.xlabel("Petal length", fontsize=14)

plt.ylabel("Petal width", fontsize=14)

plt.legend(loc="lower right", fontsize=14)

plt.axis(axes)

save_fig("perceptron_iris_plot")

plt.show()
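
The Perceptron class above hides the training loop. As a rough illustration only, here is a minimal NumPy sketch of the classic perceptron learning rule (each weight moves by eta * (y - y_hat) * x). It is not Scikit-Learn's implementation; the `train_perceptron` helper, its parameters, and the toy data are assumptions made for this example.

```python
import numpy as np

def train_perceptron(X, y, n_epochs=20, eta=1.0):
    """Hypothetical helper: classic perceptron rule with a step activation."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(n_epochs):
        for x_i, y_i in zip(X, y):
            y_hat = 1 if x_i @ w + b >= 0 else 0   # step activation
            update = eta * (y_i - y_hat)           # zero when the prediction is right
            w += update * x_i
            b += update
    return w, b

# Toy, linearly separable data (made up): [petal length, petal width]
X_toy = np.array([[1.0, 0.2], [1.5, 0.3], [4.5, 1.5], [5.0, 1.8]])
y_toy = np.array([1, 1, 0, 0])  # 1 = Iris-Setosa-like, 0 = other

w_toy, b_toy = train_perceptron(X_toy, y_toy)
preds_toy = (X_toy @ w_toy + b_toy >= 0).astype(int)
print(preds_toy)
```

Because the toy data is linearly separable, the rule stops changing the weights after a few passes over the data.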

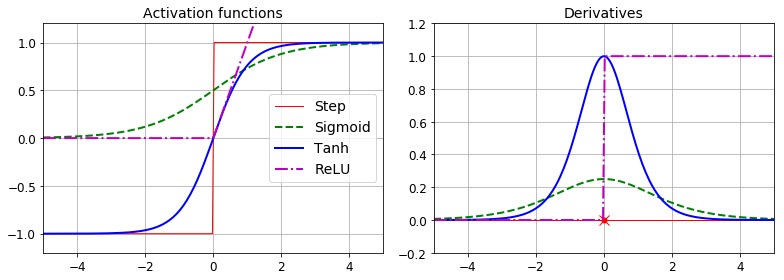

Activation functions

def sigmoid(z):

    return 1 / (1 + np.exp(-z))

def relu(z):

    return np.maximum(0, z)

def derivative(f, z, eps=0.000001):

    return (f(z + eps) - f(z - eps))/(2 * eps)

z = np.linspace(-5, 5, 200)

plt.figure(figsize=(11,4))

plt.subplot(121)

plt.plot(z, np.sign(z), "r-", linewidth=1, label="Step")

plt.plot(z, sigmoid(z), "g--", linewidth=2, label="Sigmoid")

plt.plot(z, np.tanh(z), "b-", linewidth=2, label="Tanh")

plt.plot(z, relu(z), "m-.", linewidth=2, label="ReLU")

plt.grid(True)

plt.legend(loc="center right", fontsize=14)

plt.title("Activation functions", fontsize=14)

plt.axis([-5, 5, -1.2, 1.2])

plt.subplot(122)

plt.plot(z, derivative(np.sign, z), "r-", linewidth=1, label="Step")

plt.plot(0, 0, "ro", markersize=5)

plt.plot(0, 0, "rx", markersize=10)

plt.plot(z, derivative(sigmoid, z), "g--", linewidth=2, label="Sigmoid")

plt.plot(z, derivative(np.tanh, z), "b-", linewidth=2, label="Tanh")

plt.plot(z, derivative(relu, z), "m-.", linewidth=2, label="ReLU")

plt.grid(True)

#plt.legend(loc="center right", fontsize=14)

plt.title("Derivatives", fontsize=14)

plt.axis([-5, 5, -0.2, 1.2])

save_fig("activation_functions_plot")

plt.show()
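
The derivative() helper used for the right-hand plot is a central finite difference. As a quick sanity check (a sketch, not part of the original notebook), its output for the sigmoid can be compared against the known analytic derivative sigmoid(z) * (1 - sigmoid(z)). The variable names `numeric`, `analytic` and `max_abs_error` are introduced here for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def derivative(f, z, eps=0.000001):
    # Central difference: (f(z + eps) - f(z - eps)) / (2 * eps)
    return (f(z + eps) - f(z - eps)) / (2 * eps)

z = np.linspace(-5, 5, 200)
numeric = derivative(sigmoid, z)
analytic = sigmoid(z) * (1 - sigmoid(z))
max_abs_error = np.max(np.abs(numeric - analytic))
print("max abs error:", max_abs_error)
```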

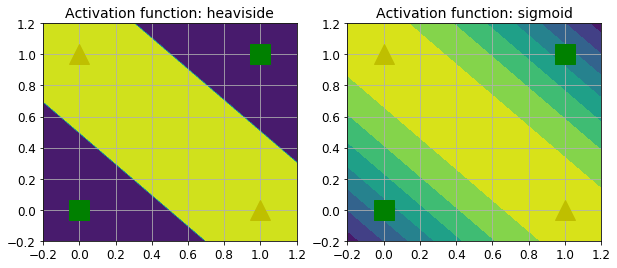

def heaviside(z):

    return (z >= 0).astype(z.dtype)

def mlp_xor(x1, x2, activation=heaviside):

    return activation(-activation(x1 + x2 - 1.5) + activation(x1 + x2 - 0.5) - 0.5)

x1s = np.linspace(-0.2, 1.2, 100)

x2s = np.linspace(-0.2, 1.2, 100)

x1, x2 = np.meshgrid(x1s, x2s)

z1 = mlp_xor(x1, x2, activation=heaviside)

z2 = mlp_xor(x1, x2, activation=sigmoid)

plt.figure(figsize=(10,4))

plt.subplot(121)

plt.contourf(x1, x2, z1)

plt.plot([0, 1], [0, 1], "gs", markersize=20)

plt.plot([0, 1], [1, 0], "y^", markersize=20)

plt.title("Activation function: heaviside", fontsize=14)

plt.grid(True)

plt.subplot(122)

plt.contourf(x1, x2, z2)

plt.plot([0, 1], [0, 1], "gs", markersize=20)

plt.plot([0, 1], [1, 0], "y^", markersize=20)

plt.title("Activation function: sigmoid", fontsize=14)

plt.grid(True)
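
To confirm that the small network plotted above computes XOR when it uses the Heaviside step function, it can be evaluated on the four corners of the unit square. This is a short check written for illustration; the `corner_*` and `xor_out` names are not from the notebook.

```python
import numpy as np

def heaviside(z):
    return (z >= 0).astype(z.dtype)

def mlp_xor(x1, x2, activation=heaviside):
    return activation(-activation(x1 + x2 - 1.5) + activation(x1 + x2 - 0.5) - 0.5)

# The four XOR input combinations
corner_x1 = np.array([0., 0., 1., 1.])
corner_x2 = np.array([0., 1., 0., 1.])
xor_out = mlp_xor(corner_x1, corner_x2)
print(xor_out)
```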

Building an Image Classifier

First let’s import TensorFlow and Keras.

import tensorflow as tf

from tensorflow import keras

tf.__version__

keras.__version__

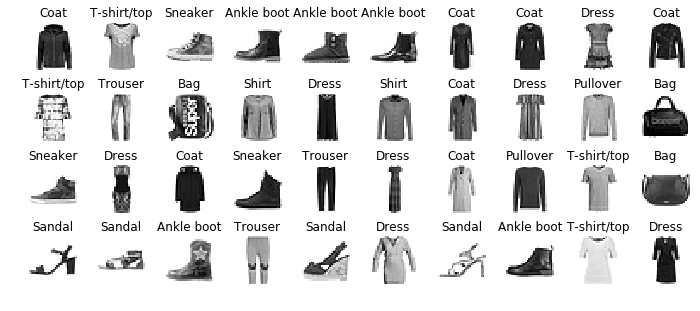

Let’s start by loading the fashion MNIST dataset. Keras has a number of functions to load popular datasets in keras.datasets. The dataset is already split for you between a training set and a test set, but it can be useful to split the training set further to have a validation set:

fashion_mnist = keras.datasets.fashion_mnist

(X_train_full, y_train_full), (X_test, y_test) = fashion_mnist.load_data()

The training set contains 60,000 grayscale images, each 28x28 pixels:

X_train_full.shape

Each pixel intensity is represented as a byte (0 to 255):

X_train_full.dtype

Let’s split the full training set into a validation set and a (smaller) training set. We also scale the pixel intensities down to the 0-1 range and convert them to floats, by dividing by 255.

X_valid, X_train = X_train_full[:5000] / 255., X_train_full[5000:] / 255.

y_valid, y_train = y_train_full[:5000], y_train_full[5000:]

X_test = X_test / 255.
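
As a small illustration of the split-and-scale step, the sketch below applies the same slicing and division to a random uint8 array standing in for the images (the `fake_images` array and the `_demo` names are made up for this example). Dividing a uint8 array by the float 255. produces floats in the 0-1 range.

```python
import numpy as np

rng = np.random.default_rng(42)
# Stand-in for X_train_full: 100 random 28x28 "images" stored as bytes
fake_images = rng.integers(0, 256, size=(100, 28, 28), dtype=np.uint8)

# Same pattern as above: first slice for validation, the rest for training
X_valid_demo, X_train_demo = fake_images[:10] / 255., fake_images[10:] / 255.

print(fake_images.dtype, "->", X_train_demo.dtype)
print(X_valid_demo.shape, X_train_demo.shape)
```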

You can plot an image using Matplotlib’s imshow() function, with a 'binary' color map:

plt.imshow(X_train[0], cmap="binary")

plt.axis('off')

plt.show()

The labels are the class IDs (represented as uint8), from 0 to 9:

y_train

Here are the corresponding class names:

class_names = ["T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",

"Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot"]

So the first image in the training set is a coat:

class_names[y_train[0]]

The validation set contains 5,000 images, and the test set contains 10,000 images:

X_valid.shape

X_test.shape

Let’s take a look at a sample of the images in the dataset:

n_rows = 4

n_cols = 10

plt.figure(figsize=(n_cols * 1.2, n_rows * 1.2))

for row in range(n_rows):

    for col in range(n_cols):

        index = n_cols * row + col

        plt.subplot(n_rows, n_cols, index + 1)

        plt.imshow(X_train[index], cmap="binary", interpolation="nearest")

        plt.axis('off')

        plt.title(class_names[y_train[index]], fontsize=12)

plt.subplots_adjust(wspace=0.2, hspace=0.5)

save_fig('fashion_mnist_plot', tight_layout=False)

plt.show()

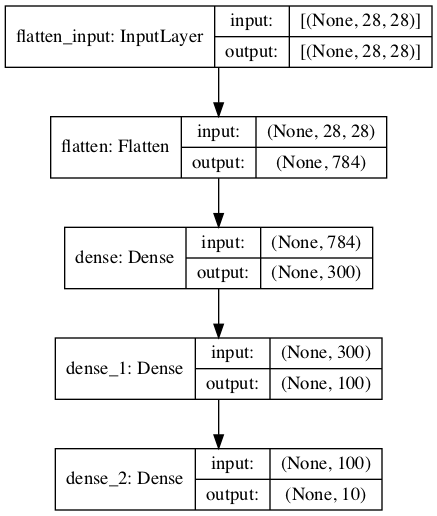

model = keras.models.Sequential()

model.add(keras.layers.Flatten(input_shape=[28, 28]))

model.add(keras.layers.Dense(300, activation="relu"))

model.add(keras.layers.Dense(100, activation="relu"))

model.add(keras.layers.Dense(10, activation="softmax"))

keras.backend.clear_session()

np.random.seed(42)

tf.random.set_seed(42)

model = keras.models.Sequential([

keras.layers.Flatten(input_shape=[28, 28]),

keras.layers.Dense(300, activation="relu"),

keras.layers.Dense(100, activation="relu"),

keras.layers.Dense(10, activation="softmax")

])

model.layers

model.summary()

keras.utils.plot_model(model, "my_mnist_model.png", show_shapes=True)

hidden1 = model.layers[1]

hidden1.name

model.get_layer(hidden1.name) is hidden1

weights, biases = hidden1.get_weights()

weights

weights.shape

biases

biases.shape

model.compile(loss="sparse_categorical_crossentropy",

optimizer="sgd",

metrics=["accuracy"])

This is equivalent to:

model.compile(loss=keras.losses.sparse_categorical_crossentropy,

optimizer=keras.optimizers.SGD(),

metrics=[keras.metrics.sparse_categorical_accuracy])
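
To make the loss and metric named above more concrete, here is a rough NumPy sketch of what they compute per instance: the loss is the negative log of the probability assigned to the true class, and the accuracy checks whether the argmax matches the label. These two functions are simplified stand-ins written for this example, not Keras's actual implementations (which differ in details such as clipping and tensor handling).

```python
import numpy as np

def sparse_categorical_crossentropy_np(y_true, y_proba, eps=1e-7):
    """Illustrative only: -log(p[true class]) for integer labels."""
    y_proba = np.clip(y_proba, eps, 1 - eps)
    return -np.log(y_proba[np.arange(len(y_true)), y_true])

def sparse_categorical_accuracy_np(y_true, y_proba):
    """Illustrative only: 1.0 where the most probable class equals the label."""
    return (np.argmax(y_proba, axis=-1) == y_true).astype(float)

y_true_demo = np.array([0, 2])
y_proba_demo = np.array([[0.5, 0.25, 0.25],
                         [0.1, 0.2, 0.7]])

losses_demo = sparse_categorical_crossentropy_np(y_true_demo, y_proba_demo)
accs_demo = sparse_categorical_accuracy_np(y_true_demo, y_proba_demo)
print(losses_demo, accs_demo)
```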

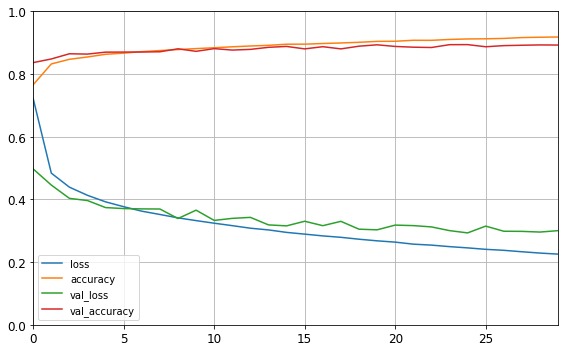

history = model.fit(X_train, y_train, epochs=30,

validation_data=(X_valid, y_valid))

history.params

print(history.epoch)

history.history.keys()

import pandas as pd

pd.DataFrame(history.history).plot(figsize=(8, 5))

plt.grid(True)

plt.gca().set_ylim(0, 1)

save_fig("keras_learning_curves_plot")

plt.show()

model.evaluate(X_test, y_test)

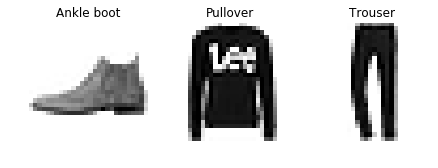

X_new = X_test[:3]

y_proba = model.predict(X_new)

y_proba.round(2)

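# predict_classes() is deprecated and missing from recent TensorFlow releases; take the argmax instead

y_pred = np.argmax(model.predict(X_new), axis=-1)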

y_pred

np.array(class_names)[y_pred]

y_new = y_test[:3]

y_new

plt.figure(figsize=(7.2, 2.4))

for index, image in enumerate(X_new):

    plt.subplot(1, 3, index + 1)

    plt.imshow(image, cmap="binary", interpolation="nearest")

    plt.axis('off')

    plt.title(class_names[y_test[index]], fontsize=12)

plt.subplots_adjust(wspace=0.2, hspace=0.5)

save_fig('fashion_mnist_images_plot', tight_layout=False)

plt.show()

Regression MLP

Let’s load, split and scale the California housing dataset (the original one, not the modified one as in chapter 2):

from sklearn.datasets import fetch_california_housing

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

housing = fetch_california_housing()

X_train_full, X_test, y_train_full, y_test = train_test_split(housing.data, housing.target, random_state=42)

X_train, X_valid, y_train, y_valid = train_test_split(X_train_full, y_train_full, random_state=42)

scaler = StandardScaler()

X_train = scaler.fit_transform(X_train)

X_valid = scaler.transform(X_valid)

X_test = scaler.transform(X_test)

np.random.seed(42)

tf.random.set_seed(42)

model = keras.models.Sequential([

keras.layers.Dense(30, activation="relu", input_shape=X_train.shape[1:]),

keras.layers.Dense(1)

])

model.compile(loss="mean_squared_error", optimizer=keras.optimizers.SGD(learning_rate=1e-3))

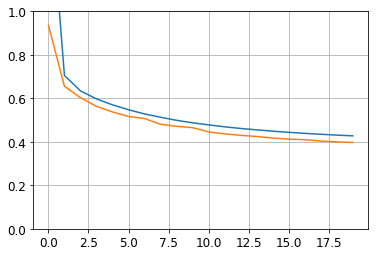

history = model.fit(X_train, y_train, epochs=20, validation_data=(X_valid, y_valid))

mse_test = model.evaluate(X_test, y_test)

X_new = X_test[:3]

y_pred = model.predict(X_new)

plt.plot(pd.DataFrame(history.history))

plt.grid(True)

plt.gca().set_ylim(0, 1)

plt.show()

y_pred

Functional API

Not all neural network models are simply sequential. Some may have complex topologies. Some may have multiple inputs and/or multiple outputs. For example, a Wide & Deep neural network (see paper) connects all or part of the inputs directly to the output layer.

np.random.seed(42)

tf.random.set_seed(42)

input_ = keras.layers.Input(shape=X_train.shape[1:])

hidden1 = keras.layers.Dense(30, activation="relu")(input_)

hidden2 = keras.layers.Dense(30, activation="relu")(hidden1)

concat = keras.layers.concatenate([input_, hidden2])

output = keras.layers.Dense(1)(concat)

model = keras.models.Model(inputs=[input_], outputs=[output])

model.summary()

model.compile(loss="mean_squared_error", optimizer=keras.optimizers.SGD(learning_rate=1e-3))

history = model.fit(X_train, y_train, epochs=20,

validation_data=(X_valid, y_valid))

mse_test = model.evaluate(X_test, y_test)

y_pred = model.predict(X_new)
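
To show the data flow of the Wide & Deep model without Keras, the following NumPy sketch runs one forward pass with random, untrained weights. The `dense` helper and the random batch are assumptions made for illustration. The point is only the shapes: the 8 raw inputs are concatenated with the 30 outputs of the second hidden layer before reaching the output layer.

```python
import numpy as np

rng = np.random.default_rng(42)

def dense(x, n_units, activation=None):
    """Hypothetical helper: dense layer with freshly drawn random weights."""
    W = rng.normal(scale=0.1, size=(x.shape[1], n_units))
    b = np.zeros(n_units)
    z = x @ W + b
    return np.maximum(0, z) if activation == "relu" else z

X_batch = rng.normal(size=(4, 8))                   # 4 instances, 8 features
deep1 = dense(X_batch, 30, "relu")                  # deep path, layer 1
deep2 = dense(deep1, 30, "relu")                    # deep path, layer 2
wide_deep = np.concatenate([X_batch, deep2], axis=1)  # wide path joins here
out_batch = dense(wide_deep, 1)                     # linear output layer

print(wide_deep.shape, out_batch.shape)
```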

What if you want to send different subsets of input features through the wide and deep paths? We will send 5 features through the wide path (features 0 to 4), and 6 features through the deep path (features 2 to 7). Note that 3 features will go through both paths (features 2, 3 and 4).

np.random.seed(42)

tf.random.set_seed(42)

input_A = keras.layers.Input(shape=[5], name="wide_input")

input_B = keras.layers.Input(shape=[6], name="deep_input")

hidden1 = keras.layers.Dense(30, activation="relu")(input_B)

hidden2 = keras.layers.Dense(30, activation="relu")(hidden1)

concat = keras.layers.concatenate([input_A, hidden2])

output = keras.layers.Dense(1, name="output")(concat)

model = keras.models.Model(inputs=[input_A, input_B], outputs=[output])

model.compile(loss="mse", optimizer=keras.optimizers.SGD(learning_rate=1e-3))

X_train_A, X_train_B = X_train[:, :5], X_train[:, 2:]

X_valid_A, X_valid_B = X_valid[:, :5], X_valid[:, 2:]

X_test_A, X_test_B = X_test[:, :5], X_test[:, 2:]

X_new_A, X_new_B = X_test_A[:3], X_test_B[:3]

history = model.fit((X_train_A, X_train_B), y_train, epochs=20,

validation_data=((X_valid_A, X_valid_B), y_valid))

mse_test = model.evaluate((X_test_A, X_test_B), y_test)

y_pred = model.predict((X_new_A, X_new_B))
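
The column slices used for the two inputs can be checked on a dummy array whose entries are simply the feature indices. This is a small sketch with made-up names (`feature_ids`, `wide_part`, `deep_part`); it shows that `[:, :5]` keeps features 0 to 4, `[:, 2:]` keeps features 2 to 7, and the two overlap on features 2, 3 and 4.

```python
import numpy as np

# Each row is [0, 1, ..., 7], so every value equals its feature index
feature_ids = np.tile(np.arange(8), (3, 1))

wide_part = feature_ids[:, :5]   # features 0 to 4
deep_part = feature_ids[:, 2:]   # features 2 to 7
shared = np.intersect1d(wide_part[0], deep_part[0])

print(wide_part[0], deep_part[0], shared)
```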

Adding an auxiliary output for regularization:

np.random.seed(42)

tf.random.set_seed(42)

input_A = keras.layers.Input(shape=[5], name="wide_input")

input_B = keras.layers.Input(shape=[6], name="deep_input")

hidden1 = keras.layers.Dense(30, activation="relu")(input_B)

hidden2 = keras.layers.Dense(30, activation="relu")(hidden1)

concat = keras.layers.concatenate([input_A, hidden2])

output = keras.layers.Dense(1, name="main_output")(concat)

aux_output = keras.layers.Dense(1, name="aux_output")(hidden2)

model = keras.models.Model(inputs=[input_A, input_B],

outputs=[output, aux_output])

model.compile(loss=["mse", "mse"], loss_weights=[0.9, 0.1], optimizer=keras.optimizers.SGD(learning_rate=1e-3))

history = model.fit([X_train_A, X_train_B], [y_train, y_train], epochs=20,

validation_data=([X_valid_A, X_valid_B], [y_valid, y_valid]))

total_loss, main_loss, aux_loss = model.evaluate(

[X_test_A, X_test_B], [y_test, y_test])

y_pred_main, y_pred_aux = model.predict([X_new_A, X_new_B])
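
With loss_weights=[0.9, 0.1], the total loss is a weighted sum of the two per-output losses. The sketch below reproduces that arithmetic on made-up targets and predictions (all values and `_demo` names are illustrative). In a real Keras model the reported total may also include other terms, such as regularization losses.

```python
import numpy as np

def mse(y_true, y_pred):
    return float(np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2))

y_true_demo = [1.0, 2.0, 3.0]
main_loss_demo = mse(y_true_demo, [1.0, 2.0, 4.0])   # one error of 1 -> 1/3
aux_loss_demo = mse(y_true_demo, [3.0, 2.0, 3.0])    # one error of 2 -> 4/3

# Weighted sum, mirroring loss_weights=[0.9, 0.1]
total_loss_demo = 0.9 * main_loss_demo + 0.1 * aux_loss_demo
print(main_loss_demo, aux_loss_demo, total_loss_demo)
```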

The subclassing API

class WideAndDeepModel(keras.models.Model):

    def __init__(self, units=30, activation="relu", **kwargs):

        super().__init__(**kwargs)

        self.hidden1 = keras.layers.Dense(units, activation=activation)

        self.hidden2 = keras.layers.Dense(units, activation=activation)

        self.main_output = keras.layers.Dense(1)

        self.aux_output = keras.layers.Dense(1)

    def call(self, inputs):

        input_A, input_B = inputs

        hidden1 = self.hidden1(input_B)

        hidden2 = self.hidden2(hidden1)

        concat = keras.layers.concatenate([input_A, hidden2])

        main_output = self.main_output(concat)

        aux_output = self.aux_output(hidden2)

        return main_output, aux_output

model = WideAndDeepModel(30, activation="relu")

model.compile(loss="mse", loss_weights=[0.9, 0.1], optimizer=keras.optimizers.SGD(learning_rate=1e-3))

history = model.fit((X_train_A, X_train_B), (y_train, y_train), epochs=10,

validation_data=((X_valid_A, X_valid_B), (y_valid, y_valid)))

total_loss, main_loss, aux_loss = model.evaluate((X_test_A, X_test_B), (y_test, y_test))

y_pred_main, y_pred_aux = model.predict((X_new_A, X_new_B))

model = WideAndDeepModel(30, activation="relu")

Saving and Restoring

np.random.seed(42)

tf.random.set_seed(42)

model = keras.models.Sequential([

keras.layers.Dense(30, activation="relu", input_shape=[8]),

keras.layers.Dense(30, activation="relu"),

keras.layers.Dense(1)

])

model.compile(loss="mse", optimizer=keras.optimizers.SGD(learning_rate=1e-3))

history = model.fit(X_train, y_train, epochs=10, validation_data=(X_valid, y_valid))

mse_test = model.evaluate(X_test, y_test)

model.save("my_keras_model.h5")

model = keras.models.load_model("my_keras_model.h5")

model.predict(X_new)

model.save_weights("my_keras_weights.ckpt")

model.load_weights("my_keras_weights.ckpt")

Using Callbacks during Training

keras.backend.clear_session()

np.random.seed(42)

tf.random.set_seed(42)

model = keras.models.Sequential([

keras.layers.Dense(30, activation="relu", input_shape=[8]),

keras.layers.Dense(30, activation="relu"),

keras.layers.Dense(1)

])

model.compile(loss="mse", optimizer=keras.optimizers.SGD(learning_rate=1e-3))

checkpoint_cb = keras.callbacks.ModelCheckpoint("my_keras_model.h5", save_best_only=True)

history = model.fit(X_train, y_train, epochs=10,

validation_data=(X_valid, y_valid),

callbacks=[checkpoint_cb])

model = keras.models.load_model("my_keras_model.h5") # rollback to best model

mse_test = model.evaluate(X_test, y_test)

model.compile(loss="mse", optimizer=keras.optimizers.SGD(learning_rate=1e-3))

early_stopping_cb = keras.callbacks.EarlyStopping(patience=10,

restore_best_weights=True)

history = model.fit(X_train, y_train, epochs=100,

validation_data=(X_valid, y_valid),

callbacks=[checkpoint_cb, early_stopping_cb])

mse_test = model.evaluate(X_test, y_test)
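
The patience logic behind early stopping can be sketched in plain Python: training stops once the validation loss has failed to improve for `patience` consecutive epochs, and the best epoch is the one to restore. This is a simplified illustration, not Keras's EarlyStopping code (it ignores options such as min_delta). The `early_stopping_epoch` function and the loss values are made up for the example.

```python
def early_stopping_epoch(val_losses, patience=10):
    """Hypothetical helper: return (stop_epoch, best_epoch), both 0-indexed."""
    best_loss = float("inf")
    best_epoch = -1
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch   # stop early
    return len(val_losses) - 1, best_epoch  # ran all epochs

val_losses_demo = [0.9, 0.7, 0.6, 0.65, 0.62, 0.61, 0.66]
stop_epoch, best_epoch = early_stopping_epoch(val_losses_demo, patience=3)
print(stop_epoch, best_epoch)
```

Here the loss never beats the value reached at epoch 2, so with a patience of 3 the loop stops at epoch 5 and epoch 2 holds the weights worth restoring.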

class PrintValTrainRatioCallback(keras.callbacks.Callback):

    def on_epoch_end(self, epoch, logs):

        print("\nval/train: {:.2f}".format(logs["val_loss"] / logs["loss"]))

val_train_ratio_cb = PrintValTrainRatioCallback()

history = model.fit(X_train, y_train, epochs=1,

validation_data=(X_valid, y_valid),

callbacks=[val_train_ratio_cb])

TensorBoard

root_logdir = os.path.join(os.curdir, "my_logs")

def get_run_logdir():

    import time

    run_id = time.strftime("run_%Y_%m_%d-%H_%M_%S")

    return os.path.join(root_logdir, run_id)

run_logdir = get_run_logdir()

run_logdir

keras.backend.clear_session()

np.random.seed(42)

tf.random.set_seed(42)

model = keras.models.Sequential([

keras.layers.Dense(30, activation="relu", input_shape=[8]),

keras.layers.Dense(30, activation="relu"),

keras.layers.Dense(1)

])

model.compile(loss="mse", optimizer=keras.optimizers.SGD(learning_rate=1e-3))

tensorboard_cb = keras.callbacks.TensorBoard(run_logdir)

history = model.fit(X_train, y_train, epochs=30,

validation_data=(X_valid, y_valid),

callbacks=[checkpoint_cb, tensorboard_cb])

To start the TensorBoard server, one option is to open a terminal, if needed activate the virtualenv where you installed TensorBoard, go to this notebook’s directory, then type:

$ tensorboard --logdir=./my_logs --port=6006

You can then open your web browser to localhost:6006 and use TensorBoard. Once you are done, press Ctrl-C in the terminal window to shut down the TensorBoard server.

Alternatively, you can load TensorBoard’s Jupyter extension and run it like this:

%load_ext tensorboard

%tensorboard --logdir=./my_logs --port=6006

run_logdir2 = get_run_logdir()

run_logdir2

keras.backend.clear_session()

np.random.seed(42)

tf.random.set_seed(42)

model = keras.models.Sequential([

keras.layers.Dense(30, activation="relu", input_shape=[8]),

keras.layers.Dense(30, activation="relu"),

keras.layers.Dense(1)

])

model.compile(loss="mse", optimizer=keras.optimizers.SGD(learning_rate=0.05))

tensorboard_cb = keras.callbacks.TensorBoard(run_logdir2)

history = model.fit(X_train, y_train, epochs=30,

validation_data=(X_valid, y_valid),

callbacks=[checkpoint_cb, tensorboard_cb])

Notice how TensorBoard now sees two runs, and you can compare the learning curves.

Check out the other available logging options:

help(keras.callbacks.TensorBoard.__init__)

Hyperparameter Tuning

keras.backend.clear_session()

np.random.seed(42)

tf.random.set_seed(42)

def build_model(n_hidden=1, n_neurons=30, learning_rate=3e-3, input_shape=[8]):

    model = keras.models.Sequential()

    model.add(keras.layers.InputLayer(input_shape=input_shape))

    for layer in range(n_hidden):

        model.add(keras.layers.Dense(n_neurons, activation="relu"))

    model.add(keras.layers.Dense(1))

    optimizer = keras.optimizers.SGD(learning_rate=learning_rate)

    model.compile(loss="mse", optimizer=optimizer)

    return model

keras_reg = keras.wrappers.scikit_learn.KerasRegressor(build_model)

keras_reg.fit(X_train, y_train, epochs=100,

validation_data=(X_valid, y_valid),

callbacks=[keras.callbacks.EarlyStopping(patience=10)])

mse_test = keras_reg.score(X_test, y_test)

y_pred = keras_reg.predict(X_new)

np.random.seed(42)

tf.random.set_seed(42)

from scipy.stats import reciprocal

from sklearn.model_selection import RandomizedSearchCV

param_distribs = {

"n_hidden": [0, 1, 2, 3],

"n_neurons": np.arange(1, 100),

"learning_rate": reciprocal(3e-4, 3e-2),

}

rnd_search_cv = RandomizedSearchCV(keras_reg, param_distribs, n_iter=10, cv=3, verbose=2)

rnd_search_cv.fit(X_train, y_train, epochs=100,

validation_data=(X_valid, y_valid),

callbacks=[keras.callbacks.EarlyStopping(patience=10)])

rnd_search_cv.best_params_

rnd_search_cv.best_score_

rnd_search_cv.best_estimator_

rnd_search_cv.score(X_test, y_test)

model = rnd_search_cv.best_estimator_.model

model

model.evaluate(X_test, y_test)

Exercise solutions

1. to 9.

See appendix A.

10.

TODO